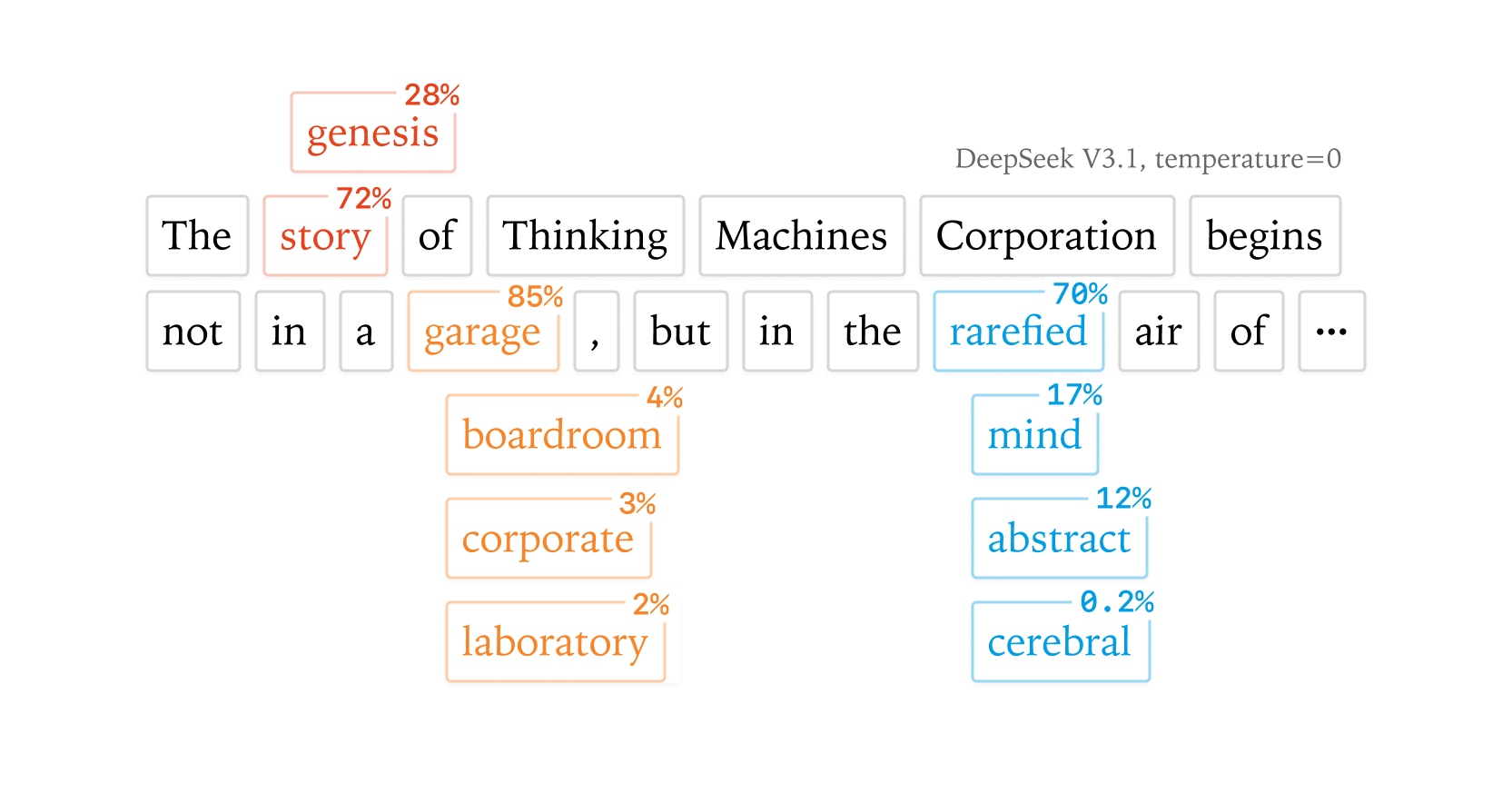

For example, you might observe that asking ChatGPT the same question multiple times provides different results. This by itself is not surprising, since getting a result from a language model involves “sampling”, a process that converts the language model’s output into a probability distribution and probabilistically selects a token.
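To make "sampling" concrete, here is a minimal sketch in plain Python. It assumes the model's output is a list of raw scores (logits), one per vocabulary token. A softmax turns those scores into a probability distribution, and a weighted random draw then picks one token index. The names `softmax` and `sample_token` and the toy logits are hypothetical illustrations, not any library's API.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw logits into a probability distribution (requires temperature > 0)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(logits, temperature=1.0, rng=random):
    """Probabilistically select one token index, weighted by softmax(logits)."""
    probs = softmax(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

# Hypothetical logits for a toy 4-token vocabulary.
logits = [2.0, 1.5, 0.3, -1.0]
print([round(p, 3) for p in softmax(logits)])
print([sample_token(logits) for _ in range(10)])  # usually differs from run to run
```

Because the draw is random, asking the same "question" (feeding the same logits) several times can yield different tokens, which is the expected, unsurprising source of varying answers.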

What might be more surprising is that even when we adjust the temperature down to 0, so that the LLM always chooses the highest-probability token (called greedy sampling) and sampling is theoretically deterministic, LLM APIs are still not deterministic in practice (see past discussions here, here, or here). Even when running inference on your own hardware with an OSS inference library like vLLM or SGLang, sampling still isn't deterministic (see here or here).
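The sketch below shows why temperature 0 is "theoretically deterministic". It assumes, as a hypothetical simplification, that a sampler divides logits by the temperature before the softmax. Dividing by zero is undefined, so temperature 0 is special-cased here as a plain argmax with no random draw. `pick_token` is an illustrative name, not vLLM's or SGLang's API, and real libraries may structure this differently.

```python
import math
import random

def pick_token(logits, temperature, rng=random):
    """Select a token index; temperature 0 is treated as greedy (argmax) selection."""
    if temperature == 0:
        # Greedy sampling: no random draw, just the highest-scoring index.
        # max() returns the first index on ties, so the choice is fixed.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Otherwise: temperature-scaled softmax followed by a weighted random draw.
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

# Hypothetical logits for a toy 4-token vocabulary.
logits = [2.0, 1.5, 0.3, -1.0]
print([pick_token(logits, 1.0) for _ in range(5)])  # random draws
print([pick_token(logits, 0) for _ in range(5)])    # [0, 0, 0, 0, 0]
```

Given bit-identical logits, the greedy branch returns the same index every time. So the nondeterminism observed in practice cannot come from this selection step on its own; this sketch does not attempt to explain where it does come from.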
